Next-gen reservoir computing is about to advance ML’s prediction capabilities

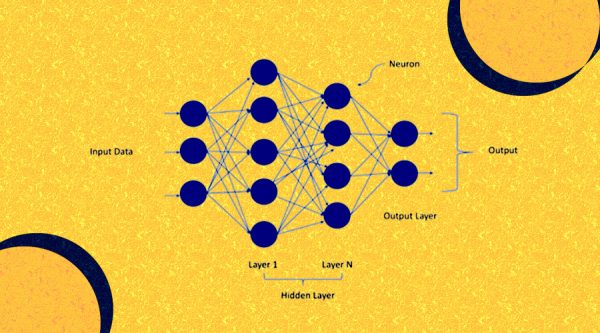

Machine learning lets computers learn from data and act without being explicitly programmed. It is now so pervasive that we might use it a thousand times a day without even noticing. AI, machine learning, and deep learning are sometimes used interchangeably, but they are not the same thing, and advances in these disruptive technologies have made the distinctions clearer. Those advances promote faster and more efficient business intelligence, with capabilities ranging from facial recognition to natural language processing. Improvements to an individual ML algorithm can also be used as components that promote efficiency across business domains. Recently, the introduction of next-gen reservoir computing has created new hype in the tech community. It can be trained to tackle some of the most difficult and complex business problems.

Researchers from the Ohio State University have recently demonstrated a new way to predict the behavior of spatiotemporal chaotic systems, such as the Earth’s changing temperatures. The research was published in Chaos: An Interdisciplinary Journal of Nonlinear Science. It claims that the new algorithm, when combined with next-generation reservoir computing, can learn spatiotemporal chaotic systems in just a few minutes, much less time than traditional machine learning algorithms need. Reservoir computing is generally considered a best-in-class machine learning approach for processing information generated by dynamical systems using observed time-series data.

So, how does the new ML algorithm work?

The researchers tested the algorithm on a complex problem that has been studied a number of times before. Compared with traditional machine learning algorithms that can solve the same task, the next-gen reservoir computing algorithm is far more accurate and uses around 400 to 1,250 times less training data to make better predictions than its counterpart. The method is also much less computationally expensive and about 240,000 times faster than traditional machine learning algorithms. Complex computing problems of this kind have traditionally required a supercomputer and been extremely slow to solve, whereas the researchers used a laptop running Windows 10 to make predictions in a fraction of a second.
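For readers who want a feel for the mechanics, here is a minimal Python sketch of the general idea usually described as next-generation reservoir computing: build features from a few time-delayed observations and their polynomial products, then fit a linear readout with ridge regression. This is not the researchers' implementation. The Hénon map used as toy data, the function names, the number of delays, and the regularization strength are all assumptions chosen for illustration, and the example is a small low-dimensional system rather than a spatiotemporal one.

```python
import numpy as np

def henon_series(n, a=1.4, b=0.3):
    """Generate n samples of the Henon map, a standard toy chaotic system (illustrative data)."""
    x = np.zeros(n)  # x[0] = x[1] = 0 as the starting point
    for t in range(1, n - 1):
        x[t + 1] = 1.0 - a * x[t] ** 2 + b * x[t - 1]
    return x

def build_features(series, k=2):
    """Stack a constant, k time-delayed values, and their unique quadratic products."""
    n = len(series)
    # Row r corresponds to time t = r + k - 1 and holds series[t], series[t-1], ...
    linear = np.column_stack([series[k - 1 - d : n - d] for d in range(k)])
    i, j = np.triu_indices(k)
    quadratic = linear[:, i] * linear[:, j]
    return np.hstack([np.ones((len(linear), 1)), linear, quadratic])

def fit_ridge(X, y, alpha=1e-8):
    """Solve the regularized least-squares problem for the linear readout weights."""
    return np.linalg.solve(X.T @ X + alpha * np.eye(X.shape[1]), X.T @ y)

if __name__ == "__main__":
    series = henon_series(600)
    train, test = series[:400], series[400:]

    # Features at time t are paired with the value at time t + 1.
    X_train = build_features(train)[:-1]
    y_train = train[2:]
    weights = fit_ridge(X_train, y_train)

    # One-step-ahead predictions on data the model has not seen.
    X_test = build_features(test)[:-1]
    predictions = X_test @ weights
    print("max one-step error:", np.max(np.abs(predictions - test[2:])))
```

The only training step is a single linear solve, which hints at why approaches of this kind can be cheap enough to run on a laptop. Real spatiotemporal problems would need far richer features and careful tuning.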

Experts have commented that modern machine learning algorithms are well suited to predicting dynamical systems by learning their underlying physical rules from historical data. But to accurately predict an entire spatiotemporal chaotic system, scientists need accurate information about specific variables and the model equations that describe how those variables are related, which can be impossible to determine in advance. Even so, scientists continue to make great strides in advancing machine learning systems.

Source: analyticsinsight.net