Researchers have developed a technique that can train ML models on a microcontroller in minutes

Researchers at MIT and the MIT-IBM Watson AI Lab have developed techniques that enable a machine learning model to be trained using less than a quarter of a megabyte of memory, making training feasible on microcontrollers and other edge hardware with limited resources.

Training an AI model on a device, especially on an embedded controller, is an open challenge. The new techniques enable AI models to continually learn from new data on intelligent edge devices, and they can train a machine learning model on a microcontroller in a matter of minutes. The researchers describe the work in a paper titled “On-Device Training Under 256KB Memory.” On-device training allows a model to adapt to new data collected by the device’s sensors and make better predictions.

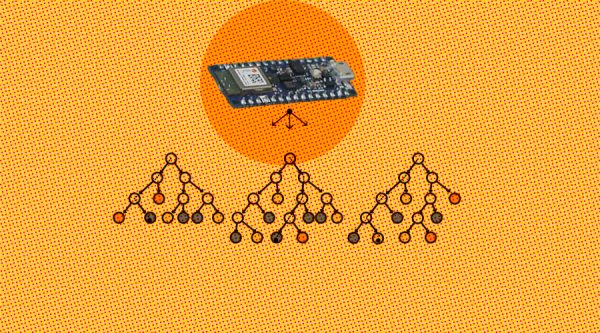

The microcontroller can train ML models:

Microcontrollers, miniature computers that can run simple commands, are the basis for billions of connected devices, from IoT devices to sensors in automobiles. The researchers developed intelligent algorithms and a framework that reduce the amount of computation required to train a machine-learning model, which makes the process faster and more memory efficient. Conventionally, training requires so much memory that it is done on powerful computers at a data center before the model is deployed on a device.

But cheap, low-power microcontrollers have extremely limited memory and no operating system, making it challenging to train AI models on edge devices. The low resource utilization of the new approach makes deep learning more accessible and gives it a broader reach, especially on low-power edge devices. Meanwhile, deep learning training systems like PyTorch and TensorFlow are often run on clusters of servers with gigabytes of memory at their disposal, and while there are edge deep learning inference frameworks, some of these lack support for the back-propagation needed to adjust the models.
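To make the memory gap concrete, here is a rough back-of-the-envelope sketch in Python. It is not taken from the paper: the per-layer activation sizes are hypothetical, and the estimate ignores weights, gradients, and optimizer state. It relies only on the general fact that plain back-propagation keeps each layer's activations for the backward pass, while inference can discard them as it goes.

```python
# Memory budget discussed in the article: a quarter of a megabyte.
BUDGET_BYTES = 256 * 1024

# Hypothetical activation sizes per layer, in bytes (illustrative only).
ACTIVATION_BYTES = [128 * 1024, 96 * 1024, 64 * 1024, 48 * 1024, 32 * 1024]


def inference_peak(acts):
    """Inference only needs a layer's input and output alive at the same time."""
    return max(a + b for a, b in zip(acts, acts[1:]))


def training_peak(acts):
    """Plain back-propagation stores every activation until the backward pass."""
    return sum(acts)


if __name__ == "__main__":
    for name, peak in [("inference", inference_peak(ACTIVATION_BYTES)),
                       ("training", training_peak(ACTIVATION_BYTES))]:
        verdict = "fits" if peak <= BUDGET_BYTES else "does not fit"
        print(f"{name}: {peak // 1024} KB, {verdict} in {BUDGET_BYTES // 1024} KB")
```

With these made-up numbers, inference stays under the budget while naive training does not, which is the kind of gap the researchers' techniques are meant to close.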

The framework the researchers have developed reduces the amount of computation required to train a model. Moreover, it preserves or improves the accuracy of the model when compared to other training approaches. MIT researcher Song Han and his collaborators employed two algorithmic solutions to make the training process more efficient and less memory-intensive. The researchers also developed a system, called a tiny training engine, that can run these algorithmic innovations on a simple microcontroller that lacks an operating system.

One of the algorithms works by freezing the weights one at a time until it detects that accuracy has dipped to a set threshold. The study enables IoT devices to not only perform inference but also continuously update their AI models with newly collected data, paving the way for lifelong on-device learning. This research from MIT has not only demonstrated these capabilities but also opened up new possibilities for privacy-preserving, real-time device personalization. The research will be presented at the Conference on Neural Information Processing Systems.
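The weight-freezing idea can be illustrated with a minimal Python sketch. This is not the researchers' implementation: the function name `select_frozen_weights`, the fixed freezing order, the toy `evaluate` callback, and the choice to leave unfrozen the weight that triggers the dip are all assumptions made for illustration.

```python
from typing import Callable, FrozenSet, List


def select_frozen_weights(
    num_weights: int,
    evaluate: Callable[[FrozenSet[int]], float],
    threshold: float,
) -> FrozenSet[int]:
    """Freeze weights one at a time; stop once accuracy dips to the threshold."""
    frozen: FrozenSet[int] = frozenset()
    for idx in range(num_weights):
        trial = frozen | {idx}
        if evaluate(trial) <= threshold:
            break  # accuracy has dipped to the threshold: stop freezing
        frozen = trial
    return frozen


# Toy stand-in for measuring accuracy (hypothetical numbers, in percent):
# freezing weight i costs COSTS[i] points of accuracy.
BASE_ACCURACY = 90
COSTS: List[int] = [1, 2, 5, 10]


def toy_evaluate(frozen: FrozenSet[int]) -> float:
    return BASE_ACCURACY - sum(COSTS[i] for i in frozen)


if __name__ == "__main__":
    frozen = select_frozen_weights(len(COSTS), toy_evaluate, threshold=85)
    print("frozen:", sorted(frozen))
    print("still trainable:", [i for i in range(len(COSTS)) if i not in frozen])
```

In this toy setup, the weights outside the returned set are the ones that would keep being updated during training, while the frozen ones stay fixed.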

Source: analyticsinsight.net