Unsupervised learning is a machine learning technique in which models are not supervised with a labelled training dataset. Instead, the models interpret the data they are given to discover previously unknown patterns and insights. This is similar to the way the human brain processes new information while learning something new.

It works mostly with data that has not been labelled. It can be compared to a student who learns by solving a problem without the help of a teacher. Unsupervised learning cannot be applied directly to regression or classification problems because, unlike supervised learning, we have the input data but no corresponding output labels. The goal is to find the underlying structure of the dataset, group the data according to their similarities, and represent the dataset in a compact form.

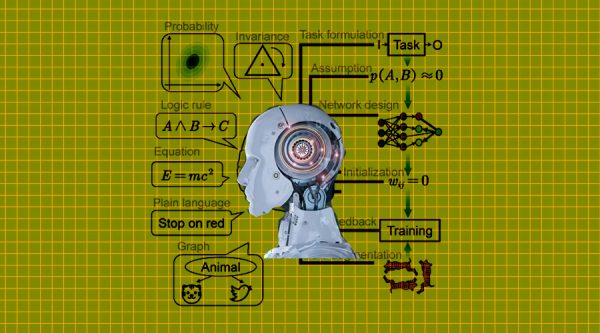

Here are the top 10 unsupervised machine learning models and algorithms.

The Gaussian mixture model is a probabilistic model that assumes all of the data points are generated from a mixture of a finite, fixed number of Gaussian distributions whose parameters are unknown.
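A common way to fit a Gaussian mixture is expectation-maximisation (EM): alternately estimate how much each Gaussian "owns" each point, then re-estimate each Gaussian's weight, mean and variance from those shares. The sketch below is a minimal one-dimensional version in plain Python; the function names, the quantile-based initialisation, the small variance floor and the synthetic data are all assumptions made for illustration.

```python
import math
import random

def normal_pdf(x, mean, var):
    """Density of a 1-D Gaussian with the given mean and variance."""
    return math.exp(-((x - mean) ** 2) / (2 * var)) / math.sqrt(2 * math.pi * var)

def fit_gmm_1d(data, k=2, iterations=100):
    """Hypothetical helper: fit a k-component 1-D Gaussian mixture with EM."""
    n = len(data)
    ordered = sorted(data)
    # Assumed initialisation: means at spread-out quantiles, variances at the overall variance.
    means = [ordered[int((j + 0.5) * n / k)] for j in range(k)]
    overall_mean = sum(data) / n
    overall_var = sum((x - overall_mean) ** 2 for x in data) / n
    variances = [overall_var] * k
    weights = [1.0 / k] * k
    for _ in range(iterations):
        # E-step: responsibility of each component for each point.
        resp = []
        for x in data:
            probs = [w * normal_pdf(x, m, v) for w, m, v in zip(weights, means, variances)]
            total = sum(probs)
            resp.append([p / total for p in probs])
        # M-step: re-estimate weights, means and variances from the responsibilities.
        for j in range(k):
            nj = sum(r[j] for r in resp)
            weights[j] = nj / n
            means[j] = sum(r[j] * x for r, x in zip(resp, data)) / nj
            # Small floor keeps a variance from collapsing to zero.
            variances[j] = sum(r[j] * (x - means[j]) ** 2 for r, x in zip(resp, data)) / nj + 1e-6
    return weights, means, variances

# Hypothetical data: 200 points around 0 and 200 points around 5.
rng = random.Random(42)
data = [rng.gauss(0, 1) for _ in range(200)] + [rng.gauss(5, 1) for _ in range(200)]
weights, means, variances = fit_gmm_1d(data)
print([round(m, 2) for m in means])
```

Unlike K-means, each point keeps a fractional membership in every component, which is what makes the model probabilistic.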

Frequent Pattern Growth (FP-Growth) – This algorithm detects frequently recurring patterns without generating candidate itemsets. Instead of applying Apriori's generate-and-test technique, it solves the problem by building an FP-tree.
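To make the FP-tree idea concrete, here is a minimal FP-Growth sketch in plain Python, assuming transactions are lists of string items and support is an absolute count. It compresses the transactions into a prefix tree, then recursively mines each item's "conditional pattern base" (the tree paths leading to that item) instead of generating and testing candidates. The class and function names and the shopping-basket data are hypothetical.

```python
from collections import defaultdict

class FPNode:
    def __init__(self, item, parent):
        self.item = item
        self.parent = parent
        self.count = 0
        self.children = {}

def _build_tree(weighted_transactions, min_support):
    """Build an FP-tree; return a header table {item: [nodes]} and item supports."""
    support = defaultdict(int)
    for items, count in weighted_transactions:
        for item in set(items):
            support[item] += count
    frequent = {item: s for item, s in support.items() if s >= min_support}
    root = FPNode(None, None)
    header = defaultdict(list)
    for items, count in weighted_transactions:
        # Keep only frequent items, most frequent first, so common prefixes share nodes.
        path = sorted((i for i in set(items) if i in frequent), key=lambda i: (-frequent[i], i))
        node = root
        for item in path:
            if item not in node.children:
                node.children[item] = FPNode(item, node)
                header[item].append(node.children[item])
            node = node.children[item]
            node.count += count
    return header, frequent

def _mine(weighted_transactions, min_support, suffix):
    header, frequent = _build_tree(weighted_transactions, min_support)
    patterns = {}
    for item, s in frequent.items():
        itemset = suffix | {item}
        patterns[itemset] = s
        # Conditional pattern base: the prefix path above every node holding `item`.
        conditional = []
        for node in header[item]:
            prefix, parent = [], node.parent
            while parent.item is not None:
                prefix.append(parent.item)
                parent = parent.parent
            if prefix:
                conditional.append((prefix, node.count))
        patterns.update(_mine(conditional, min_support, itemset))
    return patterns

def fp_growth(transactions, min_support):
    """Return {itemset: support count} for all frequent itemsets."""
    return _mine([(t, 1) for t in transactions], min_support, frozenset())

baskets = [
    ["bread", "milk"],
    ["bread", "diapers", "beer", "eggs"],
    ["milk", "diapers", "beer", "cola"],
    ["bread", "milk", "diapers", "beer"],
    ["bread", "milk", "diapers", "cola"],
]
patterns = fp_growth(baskets, min_support=3)
for itemset, count in sorted(patterns.items(), key=lambda kv: (len(kv[0]), sorted(kv[0]))):
    print(sorted(itemset), count)
```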

Clustering based on K-means – K-means clustering is an unsupervised learning approach to data analysis. It divides an unlabelled dataset into K distinct clusters, refining the partition over several iterations of the algorithm. Each data point belongs to exactly one cluster, whose members share similar traits, so the data can be organised into distinct categories. It is a convenient way to discover the groups present in an unlabelled dataset without any labelled training data.
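The iterative partitioning described above is usually implemented with Lloyd's algorithm: assign every point to its nearest centroid, move each centroid to the mean of its points, and repeat until nothing changes. This is a minimal plain-Python sketch; the `kmeans` name, the random-sample initialisation and the toy points are assumptions for illustration.

```python
import math
import random

def kmeans(points, k, iterations=100, seed=0):
    """Hypothetical helper: Lloyd's algorithm on a list of coordinate tuples."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # start from k randomly chosen data points
    for _ in range(iterations):
        # Assignment step: each point joins the cluster of its nearest centroid.
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda j: math.dist(p, centroids[j]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its members
        # (an empty cluster keeps its old centroid).
        new_centroids = [
            tuple(sum(coord) / len(members) for coord in zip(*members)) if members else centroids[j]
            for j, members in enumerate(clusters)
        ]
        if new_centroids == centroids:  # assignments stopped changing
            break
        centroids = new_centroids
    labels = [min(range(k), key=lambda j: math.dist(p, centroids[j])) for p in points]
    return centroids, labels

points = [(1, 1), (1.5, 2), (1, 0.5), (8, 8), (9, 8.5), (8.5, 9)]
centroids, labels = kmeans(points, k=2)
print(labels)
```

Because each point receives a single label, every point ends up in exactly one cluster; the final result can depend on the initial centroids, which is why implementations often run several restarts.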

Hierarchical clustering, also known as hierarchical cluster analysis, is a method for grouping unlabelled data without any human supervision. It builds a hierarchy of clusters, often drawn as a tree called a dendrogram, either bottom-up (agglomerative) by repeatedly merging the closest clusters, or top-down (divisive) by repeatedly splitting them.
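A bottom-up (agglomerative) version can be sketched in a few lines: start with every point as its own cluster and keep merging the two closest clusters, recording each merge, which is the information a dendrogram draws. This sketch assumes single linkage (cluster distance = distance between the closest pair of points) and stops at a requested number of clusters; the function name and data are hypothetical, and the brute-force pair search is only suitable for small inputs.

```python
import math

def agglomerative(points, n_clusters, linkage=min):
    """Hypothetical helper: bottom-up clustering; linkage=min is single linkage, max is complete."""
    clusters = [[i] for i in range(len(points))]  # every point starts alone
    merges = []  # (cluster_a, cluster_b, distance) in merge order
    while len(clusters) > n_clusters:
        best = None
        for a in range(len(clusters)):
            for b in range(a + 1, len(clusters)):
                d = linkage(math.dist(points[i], points[j]) for i in clusters[a] for j in clusters[b])
                if best is None or d < best[0]:
                    best = (d, a, b)
        d, a, b = best
        merges.append((clusters[a], clusters[b], d))
        clusters[a] = clusters[a] + clusters[b]  # b > a, so deleting b keeps index a valid
        del clusters[b]
    return clusters, merges

points = [(0, 0), (0, 1), (5, 5), (5, 6), (20, 20)]
clusters, merges = agglomerative(points, n_clusters=2)
print(clusters)  # [[0, 1, 2, 3], [4]]
```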

Anomaly Detection – Anomaly detection is particularly useful in training situations where plenty of normal data instances are available. By letting the machine closely approximate the population being modelled, it is possible to build an accurate model of normality; new points that fit this model poorly can then be flagged as anomalies.
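One of the simplest "models of normality" is a single Gaussian fitted to normal readings, with anything more than a few standard deviations from the mean treated as anomalous. The sketch below assumes one numeric feature, a three-standard-deviation threshold and hypothetical readings; real systems often use richer models, but the fit-normal-then-flag structure is the same.

```python
import statistics

def fit_normal_profile(samples):
    """Hypothetical helper: model normal behaviour as the mean and standard deviation."""
    return statistics.fmean(samples), statistics.stdev(samples)

def is_anomaly(value, profile, threshold=3.0):
    """Flag values more than `threshold` standard deviations from the normal mean."""
    mean, std = profile
    return abs(value - mean) / std > threshold

normal_readings = [10, 11, 9, 10, 12, 10, 9, 11, 10, 10]  # hypothetical normal data
profile = fit_normal_profile(normal_readings)
print([is_anomaly(v, profile) for v in (10.5, 9, 25)])  # [False, False, True]
```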

Principal Component Analysis (PCA) is a statistical method that uses an orthogonal transformation to convert observations of correlated features into a set of linearly uncorrelated components, known as the principal components. It is one of the most popular unsupervised machine learning methods and is commonly used for dimensionality reduction.
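The orthogonal transformation can be computed from the eigenvectors of the data's covariance matrix: projecting the centred data onto them yields components whose covariance is diagonal, i.e. linearly uncorrelated. This is a minimal NumPy sketch assuming that eigendecomposition approach (an SVD of the centred data is a common alternative); the `pca` name and the synthetic, strongly correlated data are hypothetical.

```python
import numpy as np

def pca(X, n_components):
    """Hypothetical helper: project X (n_samples x n_features) onto its top principal components."""
    X_centered = X - X.mean(axis=0)
    cov = np.cov(X_centered, rowvar=False)
    # eigh is meant for symmetric matrices; its eigenvectors are orthonormal,
    # so projecting onto them is an orthogonal transformation.
    eigenvalues, eigenvectors = np.linalg.eigh(cov)
    order = np.argsort(eigenvalues)[::-1]  # largest variance first
    components = eigenvectors[:, order[:n_components]]
    return X_centered @ components, eigenvalues[order]

rng = np.random.default_rng(0)
x = rng.normal(size=200)
X = np.column_stack([x, 2 * x + 0.1 * rng.normal(size=200)])  # two strongly correlated features
scores, variances = pca(X, n_components=2)
print(np.corrcoef(X, rowvar=False)[0, 1], np.cov(scores, rowvar=False)[0, 1])
```

Almost all of the variance lands in the first component here, so keeping only that one column would reduce the data to one dimension with little loss.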

Apriori Algorithm – The Apriori algorithm works on databases that contain transactions. It relies on association rules, which measure how strongly two items are connected. The algorithm determines the associations between itemsets by searching level by level in breadth-first order, which makes it useful for finding frequent itemsets in a large dataset.
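The level-by-level search can be sketched as a generate-and-test loop: count the support of all candidate itemsets of one size, keep the frequent ones, then join them into candidates one item larger, pruning any candidate with an infrequent subset. The sketch also computes rule confidence, support(A and B) / support(A), as one common measure of association strength. Function names, the absolute support threshold and the basket data are assumptions.

```python
from itertools import combinations

def apriori(transactions, min_support):
    """Hypothetical helper: {itemset: support count} for itemsets with support >= min_support."""
    transactions = [frozenset(t) for t in transactions]
    current = [frozenset([item]) for item in {i for t in transactions for i in t}]
    frequent = {}
    size = 1
    while current:
        # Test: count how many transactions contain each candidate of this size.
        counts = {c: sum(1 for t in transactions if c <= t) for c in current}
        level = {c: n for c, n in counts.items() if n >= min_support}
        frequent.update(level)
        # Generate: join frequent itemsets into candidates one item larger (next BFS level).
        size += 1
        candidates = {a | b for a in level for b in level if len(a | b) == size}
        # Prune: a candidate can only be frequent if all its subsets are (Apriori property).
        current = [c for c in candidates
                   if all(frozenset(s) in level for s in combinations(c, size - 1))]
    return frequent

def confidence(frequent, antecedent, consequent):
    """Confidence of the rule antecedent -> consequent."""
    a = frozenset(antecedent)
    return frequent[a | frozenset(consequent)] / frequent[a]

baskets = [
    ["bread", "milk"],
    ["bread", "diapers", "beer", "eggs"],
    ["milk", "diapers", "beer", "cola"],
    ["bread", "milk", "diapers", "beer"],
    ["bread", "milk", "diapers", "cola"],
]
frequent = apriori(baskets, min_support=3)
print(confidence(frequent, {"beer"}, {"diapers"}))  # 1.0
```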

K-NN – K-NN stands for "k-nearest neighbours". The K-NN algorithm classifies a new data point according to how similar it is to previously stored data points, so new data can easily be placed in a suitable category. Strictly speaking, classifying with K-NN requires labelled stored points, so it is usually grouped with supervised methods; the underlying nearest-neighbour search, however, needs no labels.
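Here is a minimal sketch of the idea, assuming Euclidean distance and a simple majority vote among the k closest stored points; the function name, the stored points and their labels are hypothetical.

```python
import math
from collections import Counter

def knn_predict(points, labels, query, k=3):
    """Hypothetical helper: label `query` by majority vote among its k nearest stored points."""
    nearest = sorted(range(len(points)), key=lambda i: math.dist(points[i], query))[:k]
    votes = Counter(labels[i] for i in nearest)
    return votes.most_common(1)[0][0]

stored = [(1, 1), (1, 2), (2, 1), (6, 6), (7, 7), (6, 7)]
stored_labels = ["small", "small", "small", "large", "large", "large"]
print(knn_predict(stored, stored_labels, (1.5, 1.5)))  # small
```

Nothing is trained in advance: all the work happens at query time, when distances to every stored point are computed.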

Neural Networks: Because a sufficiently large neural network can approximate any continuous function to arbitrary accuracy, it is in principle possible to use one to learn any such function.
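One common way to train a neural network without labels is an autoencoder, which learns to reconstruct its own input through a narrow hidden layer, so the input itself serves as the target. The sketch below is a minimal, assumed illustration in NumPy: the `train_autoencoder` name, the single tanh hidden layer of two units, the learning rate, the step count and the synthetic data are all hypothetical choices, and the gradients are written out by hand for plain full-batch gradient descent.

```python
import numpy as np

def train_autoencoder(X, hidden=2, lr=0.1, steps=3000, seed=0):
    """Hypothetical helper: one-hidden-layer autoencoder trained on reconstruction error."""
    rng = np.random.default_rng(seed)
    n, d = X.shape
    W1 = rng.normal(0.0, 0.5, size=(d, hidden))
    b1 = np.zeros(hidden)
    W2 = rng.normal(0.0, 0.5, size=(hidden, d))
    b2 = np.zeros(d)
    losses = []
    for _ in range(steps):
        # Forward pass: encode into the small hidden layer, then decode back to d features.
        H = np.tanh(X @ W1 + b1)
        X_hat = H @ W2 + b2
        err = X_hat - X
        losses.append(float(np.mean(err ** 2)))
        # Backward pass: gradients of the mean squared reconstruction error.
        d_out = 2.0 * err / err.size
        dW2 = H.T @ d_out
        db2 = d_out.sum(axis=0)
        d_hidden = (d_out @ W2.T) * (1.0 - H ** 2)  # derivative of tanh
        dW1 = X.T @ d_hidden
        db1 = d_hidden.sum(axis=0)
        W1 -= lr * dW1
        b1 -= lr * db1
        W2 -= lr * dW2
        b2 -= lr * db2
    return (W1, b1, W2, b2), losses

# Hypothetical data: 3-D points that lie close to a 1-D line.
rng = np.random.default_rng(1)
t = rng.uniform(-1.0, 1.0, size=(200, 1))
X = t * np.array([1.0, 2.0, -1.0]) + 0.05 * rng.normal(size=(200, 3))
params, losses = train_autoencoder(X)
print(losses[0], losses[-1])
```

No labels appear anywhere; the falling reconstruction error shows the hidden layer learning a compact representation of the data.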

Independent component analysis (ICA) works by assuming that the source signals are statistically independent and non-Gaussian. Under that assumption, it can separate a mixture of signals into their individual sources by finding the directions in which the mixed data look least Gaussian.
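A widely used way to find those least-Gaussian directions is the FastICA fixed-point iteration. The sketch below is a minimal NumPy version under stated assumptions: the data are first whitened (decorrelated and scaled to unit variance), components are extracted one at a time with deflation, and the tanh nonlinearity is used as the non-Gaussianity measure. The `fast_ica` name, the two synthetic sources and the mixing matrix are hypothetical. ICA recovers the sources only up to order, sign and scale, so the check compares absolute correlations.

```python
import numpy as np

def fast_ica(X, n_components, iterations=200, tol=1e-8, seed=0):
    """Hypothetical helper: FastICA with deflation; X is (n_samples, n_features) mixed signals."""
    rng = np.random.default_rng(seed)
    X = X - X.mean(axis=0)
    # Whitening: rotate and rescale so the features are uncorrelated with unit variance.
    eigenvalues, eigenvectors = np.linalg.eigh(np.cov(X, rowvar=False))
    Z = X @ (eigenvectors / np.sqrt(eigenvalues))
    W = np.zeros((n_components, Z.shape[1]))
    for i in range(n_components):
        w = rng.normal(size=Z.shape[1])
        w /= np.linalg.norm(w)
        for _ in range(iterations):
            g = np.tanh(Z @ w)
            # Fixed-point update: E[z g(w.z)] - E[g'(w.z)] w
            w_new = (Z * g[:, None]).mean(axis=0) - (1.0 - g ** 2).mean() * w
            # Deflation: remove projections onto components already found.
            w_new -= W[:i].T @ (W[:i] @ w_new)
            w_new /= np.linalg.norm(w_new)
            converged = abs(abs(w_new @ w) - 1.0) < tol
            w = w_new
            if converged:
                break
        W[i] = w
    return Z @ W.T  # estimated sources, one per column

t = np.linspace(0, 8, 2000)
S = np.column_stack([np.sin(2 * t), np.sign(np.sin(3 * t))])  # two non-Gaussian sources
A = np.array([[1.0, 1.0], [0.5, 2.0]])  # hypothetical mixing matrix
X = S @ A.T
recovered = fast_ica(X, n_components=2)
corr = np.abs(np.corrcoef(S.T, recovered.T)[:2, 2:])
print(np.round(corr, 3))
```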

In conclusion, the biggest disadvantage of unsupervised learning is that, without labels, it cannot give precise information about how the data should be classified. On the other hand, it lets you discover a wide variety of previously unknown patterns in data. Because the results are not checked against known labels, it is essential to understand how the algorithms used in these models work.