Investors in AI-first technology companies serving the defense industry, such as Palantir, Primer and Anduril, are doing well. Anduril, for one, reached a valuation of over $4 billion in less than four years. Many other companies that build general-purpose, AI-first technologies — such as image labeling — receive large (undisclosed) portions of their revenue from the defense industry.

Investors in AI-first technology companies that aren’t even intended to serve the defense industry often find that these firms eventually (and sometimes inadvertently) help other powerful institutions, such as police forces, municipal agencies and media companies, carry out their duties.

Most of these companies do a lot of good work, such as DataRobot helping agencies understand the spread of COVID, HASH running simulations of vaccine distribution and Lilt making school communications available to immigrant parents in a U.S. school district.

However, there are also less positive examples: technology made by Israeli cyber-intelligence firm NSO was used to hack 37 smartphones belonging to journalists, human-rights activists, business executives and the fiancée of murdered Saudi journalist Jamal Khashoggi, according to a report by The Washington Post and 16 media partners. The report claims the phones were on a list of more than 50,000 numbers based in countries that surveil their citizens and are known to have hired the Israeli firm’s services.

Investors in these companies may now be asked challenging questions by other founders, limited partners and governments about whether the technology is too powerful, enables too much or is applied too broadly. These are questions of degree, but are sometimes not even asked upon making an investment.

Since publishing “The AI-First Company,” and over the better part of a decade of investing in such firms, I’ve had the privilege of talking to people with a wide range of perspectives: CEOs of big companies, founders of (currently!) small companies and politicians. One important question keeps coming up: How do investors ensure that the startups they invest in apply AI responsibly?

Let’s be frank: It’s easy for startup investors to hand-wave away such an important question by saying something like, “It’s so hard to tell when we invest.” Startups are nascent forms of something to come. However, AI-first startups are working with something powerful from day one: Tools that allow leverage far beyond our physical, intellectual and temporal reach.

AI not only gives people the ability to put their hands around heavier objects (robots) or get their heads around more data (analytics), it also gives them the ability to bend their minds around time (predictions). When people can make predictions and learn as they play out, they can learn fast. When people can learn fast, they can act fast.

Like any tool, these can be used for good or for bad. You can use a rock to build a house or throw it at someone. You can use gunpowder for beautiful fireworks or to fire bullets.

Similarly, AI-based computer vision models can be used to figure out the moves of a dance group or a terrorist group. AI-powered drones can aim a camera at us while we go off ski jumps, but they can also aim a gun at us.

This article covers the basics, metrics and politics of responsibly investing in AI-first companies.

The basics

Investors in and board members of AI-first companies must take at least partial responsibility for the decisions of the companies in which they invest.

Investors influence founders, whether they intend to or not. Founders constantly ask investors about what products to build, which customers to approach and which deals to execute. They do this to learn and improve their chances of winning. They also do this, in part, to keep investors engaged and informed, because investors may be a valuable source of capital.

Investors may think they’re operating in an entirely Socratic way, as a sounding board for founders, but in reality they influence key decisions just by asking questions, let alone by giving specific advice on what to build, how to sell it and how much to charge. This is why investors need their own framework for responsibly investing in AI, lest they contribute to a bad outcome.

Board members have input on key strategic decisions — legally and practically. Board meetings are where key product, pricing and packaging decisions are made. Some of these decisions affect how the core technology is used — for example, whether to grant exclusive licenses to governments, set up foreign subsidiaries or get personal security clearances. This is why board members need their own framework for responsibly investing in AI.

The metrics

The first step in taking responsibility is knowing what on earth is going on. It’s easy for startup investors to shrug off the need to know what’s going on inside AI-based models. Testing the code to see if it works before sending it off to a customer site is sufficient for many software investors.

However, AI-first products constantly adapt, evolve and spawn new data. Some consider monitoring AI so hard as to be practically impossible. Even so, we can set up both metrics and management systems to monitor the effects of AI-first products.

We can use hard metrics to figure out if a startup’s AI-based system is working at all or if it’s getting out of control. The right metrics to use depend on the type of modeling technique, the data used to train the model and the intended effect of using the prediction. For example, when the goal is hitting a target, one can measure true/false positive/negative rates.

Sensitivity and specificity may also be useful in healthcare applications as clues to the efficacy of a diagnostic product: Does it detect the disease often enough when it is present (sensitivity), and rule it out often enough when it is absent (specificity), to warrant the cost and pain of the diagnostic process? “The AI-First Company” explains these metrics and lists others to consider putting in place.
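To make these rates concrete, here is a minimal Python sketch, assuming a binary classifier whose predictions and ground-truth labels are available as lists of booleans. The names `ConfusionCounts`, `count_outcomes` and `rates` are illustrative only, not taken from the book or from any library.

```python
from dataclasses import dataclass


@dataclass
class ConfusionCounts:
    tp: int  # true positives: condition present, model flagged it
    fp: int  # false positives: condition absent, model flagged it anyway
    tn: int  # true negatives: condition absent, model did not flag it
    fn: int  # false negatives: condition present, model missed it


def count_outcomes(actual: list[bool], predicted: list[bool]) -> ConfusionCounts:
    """Tally the four outcomes of a binary classifier against ground truth."""
    if len(actual) != len(predicted):
        raise ValueError("actual and predicted must have the same length")
    pairs = list(zip(actual, predicted))
    return ConfusionCounts(
        tp=sum(1 for a, p in pairs if a and p),
        fp=sum(1 for a, p in pairs if not a and p),
        tn=sum(1 for a, p in pairs if not a and not p),
        fn=sum(1 for a, p in pairs if a and not p),
    )


def rates(c: ConfusionCounts) -> dict[str, float]:
    """True/false positive/negative rates; NaN when a class has no examples."""
    positives = c.tp + c.fn  # cases where the condition is actually present
    negatives = c.tn + c.fp  # cases where it is actually absent
    nan = float("nan")
    return {
        "sensitivity": c.tp / positives if positives else nan,  # true positive rate
        "specificity": c.tn / negatives if negatives else nan,  # true negative rate
        "false_positive_rate": c.fp / negatives if negatives else nan,
        "false_negative_rate": c.fn / positives if positives else nan,
    }


if __name__ == "__main__":
    actual = [True, True, True, True, False, False, False, False, False, False]
    predicted = [True, True, True, False, False, False, False, False, True, False]
    counts = count_outcomes(actual, predicted)
    print(counts)  # ConfusionCounts(tp=3, fp=1, tn=5, fn=1)
    print(rates(counts)["sensitivity"])  # 0.75
```

In this toy data the model catches three of four real cases (sensitivity 0.75) and wrongly flags one of six healthy cases, so specificity is 5/6. Tracking both matters because a model can score well on one by sacrificing the other.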

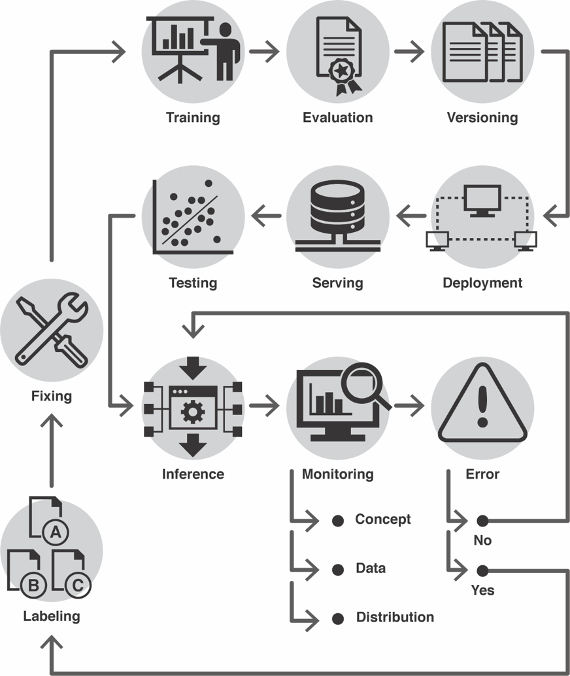

We can also implement a machine learning management loop that catches models before they drift away from reality. “Drift” occurs when the data a model currently observes differs from the data it was trained on, and it is measured by comparing the distributions of those two data sets. Measuring model drift routinely is imperative, given that the world changes gradually, suddenly and periodically.
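There are many ways to compare the two distributions; one commonly used statistic is the population stability index (PSI), swapped in here as an example rather than as the method the article prescribes. The sketch below assumes a single numeric feature and equal-width bins derived from the training data; the function names are hypothetical.

```python
import math
from bisect import bisect_right


def bin_edges(reference: list[float], n_bins: int = 10) -> list[float]:
    """Interior edges of equal-width bins spanning the reference (training) data."""
    lo, hi = min(reference), max(reference)
    if lo == hi:
        return []  # degenerate case: all reference values are identical
    width = (hi - lo) / n_bins
    return [lo + width * i for i in range(1, n_bins)]


def bin_proportions(values: list[float], edges: list[float], floor: float = 1e-6) -> list[float]:
    """Share of values per bin; values outside the reference range land in the end bins."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    # Flooring empty bins avoids log(0) and division by zero below.
    return [max(c / len(values), floor) for c in counts]


def population_stability_index(reference: list[float], current: list[float], n_bins: int = 10) -> float:
    """PSI = sum over bins of (current - reference) * ln(current / reference)."""
    edges = bin_edges(reference, n_bins)
    expected = bin_proportions(reference, edges)
    observed = bin_proportions(current, edges)
    return sum((o - e) * math.log(o / e) for o, e in zip(observed, expected))


if __name__ == "__main__":
    training = [i / 100 for i in range(100)]  # roughly uniform on [0, 1)
    live = [0.5 + i / 200 for i in range(100)]  # same feature, shifted upward
    print(population_stability_index(training, training))  # 0.0: no drift
    print(population_stability_index(training, live) > 0.25)  # True: large drift
```

A frequently quoted rule of thumb (a convention, not a standard) treats a PSI below 0.1 as stable, 0.1 to 0.25 as worth investigating and above 0.25 as significant drift. Each term in the sum is non-negative, so the index only grows as the live distribution moves away from the training one.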

We can detect gradual changes only if we collect metrics over time, sudden changes only if we get metrics close to real time, and periodic changes only if we accumulate metrics at the same intervals. The following schematic shows some of the steps involved in a machine learning management loop; it makes clear why we must constantly and consistently measure the same things at every step of building, testing, deploying and using models.

Image Credits: Ash Fontana
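The three kinds of change described above map naturally onto checks against a metric recorded at fixed intervals. The sketch below is hypothetical: the class name, the two thresholds and the optional `period` parameter are assumptions chosen for illustration. A cumulative check against a baseline catches gradual drift, a step check catches sudden jumps, and when a regular cycle is expected the step check compares against the same point in the previous cycle instead of the previous interval.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class IntervalMonitor:
    """Tracks one model metric recorded at fixed intervals (e.g. hourly or daily)."""
    baseline: float  # metric value when the model was validated and deployed
    sudden_threshold: float  # largest acceptable jump versus the comparison interval
    gradual_threshold: float  # largest acceptable cumulative move from the baseline
    period: Optional[int] = None  # intervals per expected cycle, e.g. 7 for a weekly pattern
    history: list[float] = field(default_factory=list)

    def record(self, value: float) -> list[str]:
        """Store this interval's value and return any alerts it triggers."""
        alerts = []
        # Compare like with like: with a known cycle, compare against the same
        # point in the previous cycle so the expected pattern is not flagged;
        # otherwise compare against the immediately preceding interval.
        lag = self.period or 1
        if len(self.history) >= lag and abs(value - self.history[-lag]) > self.sudden_threshold:
            alerts.append("sudden")
        # Slow drift: no single step is large, but the total move from baseline is.
        if abs(value - self.baseline) > self.gradual_threshold:
            alerts.append("gradual")
        self.history.append(value)
        return alerts


if __name__ == "__main__":
    accuracy = IntervalMonitor(baseline=0.90, sudden_threshold=0.05, gradual_threshold=0.08)
    for daily_value in [0.89, 0.87, 0.85, 0.81, 0.70]:
        print(daily_value, accuracy.record(daily_value))
```

With these daily accuracy readings, no single step before the fourth exceeds 0.05, yet the fourth reading has drifted 0.09 from the baseline and triggers only the "gradual" alert; the fifth drops 0.11 in one step and triggers both. None of this works unless the metric is recorded at consistent intervals.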

Bias in AI is both an ethical and a technical problem. We deal with the technical part here and summarize the management of machine bias as treating it the same way we often manage human bias: With hard constraints. Setting constraints on what the model can predict and who can access those predictions, along with limits on feedback data, acceptable uses of the predictions and more, takes effort when designing the system but makes appropriate alerting possible.

Additionally, setting standards for training data can increase the likelihood that the model accounts for a wide range of inputs. Speaking to the model’s designer is the best way to understand the risks of any bias inherent in their approach. Once these constraints are set, consider automatic actions, such as shutting the model down or raising an alert, when they are violated.
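One way to picture hard constraints with automatic actions is a thin gate between the model and its users. The Python sketch below is a hypothetical illustration, assuming predictions are discrete labels and that each caller identifies themselves and states an intended use. It covers three of the constraints listed above (what the model can predict, who can access predictions and acceptable uses); its `alerts` list stands in for a real alerting system.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Guardrail:
    """Hypothetical gate between a model and its users that enforces hard constraints."""
    allowed_predictions: set[str]  # what the model is permitted to output
    authorized_users: set[str]  # who may access predictions
    allowed_uses: set[str]  # acceptable uses of the predictions
    max_violations: int = 3  # automatic shutdown after this many violating requests
    violations: int = 0
    shut_down: bool = False
    alerts: list[str] = field(default_factory=list)  # stand-in for a real alerting channel

    def release(self, user: str, use: str, prediction: str) -> Optional[str]:
        """Return the prediction only if every constraint holds; otherwise withhold it."""
        if self.shut_down:
            return None
        problems = []
        if prediction not in self.allowed_predictions:
            problems.append(f"prediction {prediction!r} is outside the allowed set")
        if user not in self.authorized_users:
            problems.append(f"user {user!r} is not authorized")
        if use not in self.allowed_uses:
            problems.append(f"use {use!r} is not an accepted use")
        if not problems:
            return prediction
        # Automatic actions: alert on every violation, shut down after repeated ones.
        self.violations += 1
        self.alerts.extend(problems)
        if self.violations >= self.max_violations:
            self.shut_down = True
            self.alerts.append("too many violations: model shut down pending human review")
        return None
```

For example, with `max_violations=2`, a request from an unauthorized user is withheld and logged; a second violating request trips the automatic shutdown, after which even valid requests return `None`. Keeping the constraints as explicit sets makes them easy to review with the model's designer.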

The politics

Helping powerful institutions by giving them powerful tools is often interpreted as direct support of the political parties that put those institutions in power. Alignment is often assumed, rightly or wrongly, and it carries consequences. Team members, customers and potential investors aligned with different political parties may not want to work with you. Media may target you. This is to be expected, so whether to work with such institutions should be framed internally as an explicit choice.

The primary, most direct political issues arise for investors when companies do work for the military. We’ve seen large companies such as Google face employee strikes over the mere potential of taking on military contracts.

Secondary political issues, such as personal privacy, are more a matter of degree: The question is whether they build enough pressure to limit the use of AI. For example, when civil liberties groups target applications that may encroach on a person’s privacy, investors may have to consider restrictions on the use of those applications.

Tertiary political issues are generally industrial, such as how AI may affect the way we work. These are hard for investors to manage, because the impact on society is often unknowable on the timeline over which politicians can operate, i.e., a few years.

Responsible investors will constantly consider all three areas of political concern (military, privacy and industry) and set the internal policy-making agenda for the short, medium and long term according to how close each political risk is.

Arguably, AI-first companies that want to bring about peace in our world may take the view that they eventually will have to “pick a side” to empower. This is a strong point of view to take, but one that’s justified by certain (mostly utilitarian) views on violence.

In conclusion

The responsibilities of AI-first investors run deep, and investors in this field rarely know how deep when they’re just getting started; many fail to fully appreciate the potential impact of their work. Perhaps the solution is to develop a strong ethical framework to apply consistently across all investments.

I haven’t delved into ethical frameworks because, well, they take tomes to properly consider, a lifetime to construct for oneself and what feels like a lifetime to construct for companies. Suffice it to say, I believe philosophers could be better utilized in AI-first companies to develop such frameworks.

Until then, investors that are aware of the basics, metrics and politics will hopefully be a good influence on the builders of this most powerful technology.

Source: techcrunch.com