TinyML has become quite popular in the tech community owing to its growth opportunities

The world is getting a whole lot smarter as the technology landscape keeps evolving, and emerging technology trends have the potential to transform business processes. With the integration of artificial intelligence and machine learning, the tech industry is about to unlock multi-billion dollar market prospects. One of these prospects is TinyML, which is the art and science of producing machine learning models robust enough to run on end devices, and this is why it is gaining popularity across business domains. TinyML has made it possible for machine learning models to be deployed at the edge.

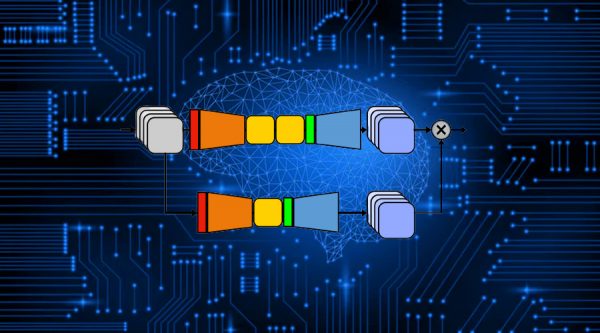

So, what actually is TinyML? Experts broadly define it as a fast-growing field of ML technologies and applications, including hardware, algorithms, and software, that are capable of performing on-device sensor data analytics. Recently, researchers who have seen the growing interest in TinyML decided to extend its capabilities. Their innovation brings self-attention, which has become one of the key components in several deep learning architectures, to TinyML. In a new research paper, scientists from the University of Waterloo and DarwinAI have introduced a new deep learning architecture that brings highly efficient self-attention to TinyML. The mechanism is known as the double-condensing attention condenser. The architecture builds on the team's earlier research and looks promising for edge AI applications.

How is self-attention in TinyML affecting modern AI models?

Typically, deep neural network models are designed to process a single piece of data at a time. In many applications, however, the network must process a whole sequence of inputs together and take the relations between the elements of that sequence into account. Self-attention aims to address this need. It is one of the most efficient and successful mechanisms for capturing relations within sequential data. Self-attention is generally used in transformers, the deep learning architecture behind popular LLMs like GPT-3 and OPT-175B, but it is expected to be very useful for TinyML applications as well.
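To make the idea concrete, here is a minimal sketch of standard scaled dot-product self-attention, the form used in transformers, written in plain Python. It is not the double-condensing attention condenser from the paper. The function names, the toy three-element sequence, and the identity projection matrices are all assumptions chosen for illustration.

```python
import math

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def softmax(row):
    """Numerically stable softmax over one list of scores."""
    peak = max(row)
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(x, w_q, w_k, w_v):
    """Scaled dot-product self-attention over a sequence x (one row per element)."""
    # Project the same input into queries, keys, and values.
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    scale = math.sqrt(len(k[0]))
    # scores[i][j] measures how strongly element i relates to element j.
    k_t = [list(col) for col in zip(*k)]
    scores = [[s / scale for s in row] for row in matmul(q, k_t)]
    # Each row of weights is a probability distribution over the sequence.
    weights = [softmax(row) for row in scores]
    # Each output is a weighted mix of all value vectors.
    return matmul(weights, v), weights

# Toy sequence of three 2-dimensional inputs (hypothetical data).
sequence = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
# Identity projections are an assumption; real models learn these matrices.
identity = [[1.0, 0.0], [0.0, 1.0]]
outputs, weights = self_attention(sequence, identity, identity, identity)
```

Every output row mixes information from the whole sequence, which is how the mechanism captures relations between inputs. The score matrix grows with the square of the sequence length, and that cost is one reason efficient attention variants matter on memory-constrained devices.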

Experts say that TinyML holds great potential within the broader field of machine learning. Recent innovations in TinyML are enabling increasingly complex deep learning models to run directly on microcontrollers, using existing microcontroller hardware. This branch of machine learning represents a collaborative effort between the embedded ultra-low-power systems community and the ML community, which have traditionally operated independently.

In the paper, the scientists also discuss TinySpeech, a neural network that uses attention condensers for speech recognition. Attention condensers have demonstrated great success across manufacturing, automotive, and healthcare applications, where traditional approaches had various limits.

Source: analyticsinsight.net